The answer is—it didn’t. Over the last three years (2022-2025) AI has become a buzzword that marketers love to throw around, and that buzzword keeps evolving. In reality, AI’s growth is a combination of decades of technological advancements, many of which have gone through their own hype cycles.

Take Machine Learning, for example. In the 2010s, it was the must-have buzzword: every product had to be built with it or feature some form of it to stay relevant. But the term itself has been around since the 1950s. The same goes for Neural Networks, which surged in popularity during the Deep Learning boom even though they originated decades earlier. This boom gave rise to the buzzword Big Data. Since the release of ChatGPT in 2022, which OpenAI spent seven years developing, the hype has shifted and new buzzwords have taken over. Today, it’s all about LLMs (Large Language Models), Generative AI, Conversational AI, Modern AI, or simply AI. These terms dominate marketing materials, investor pitches, and product descriptions, often without much understanding of what they actually mean.

Another emerging buzzword is hallucinations, a term that only entered mainstream AI discussions with the rise of LLMs. It refers to cases where an AI confidently generates incorrect or misleading information. The only time you’ll hear this term in marketing? When a company is trying to convince you that its AI model has “fewer hallucinations” than the competition.

The Simple Fact of the Matter

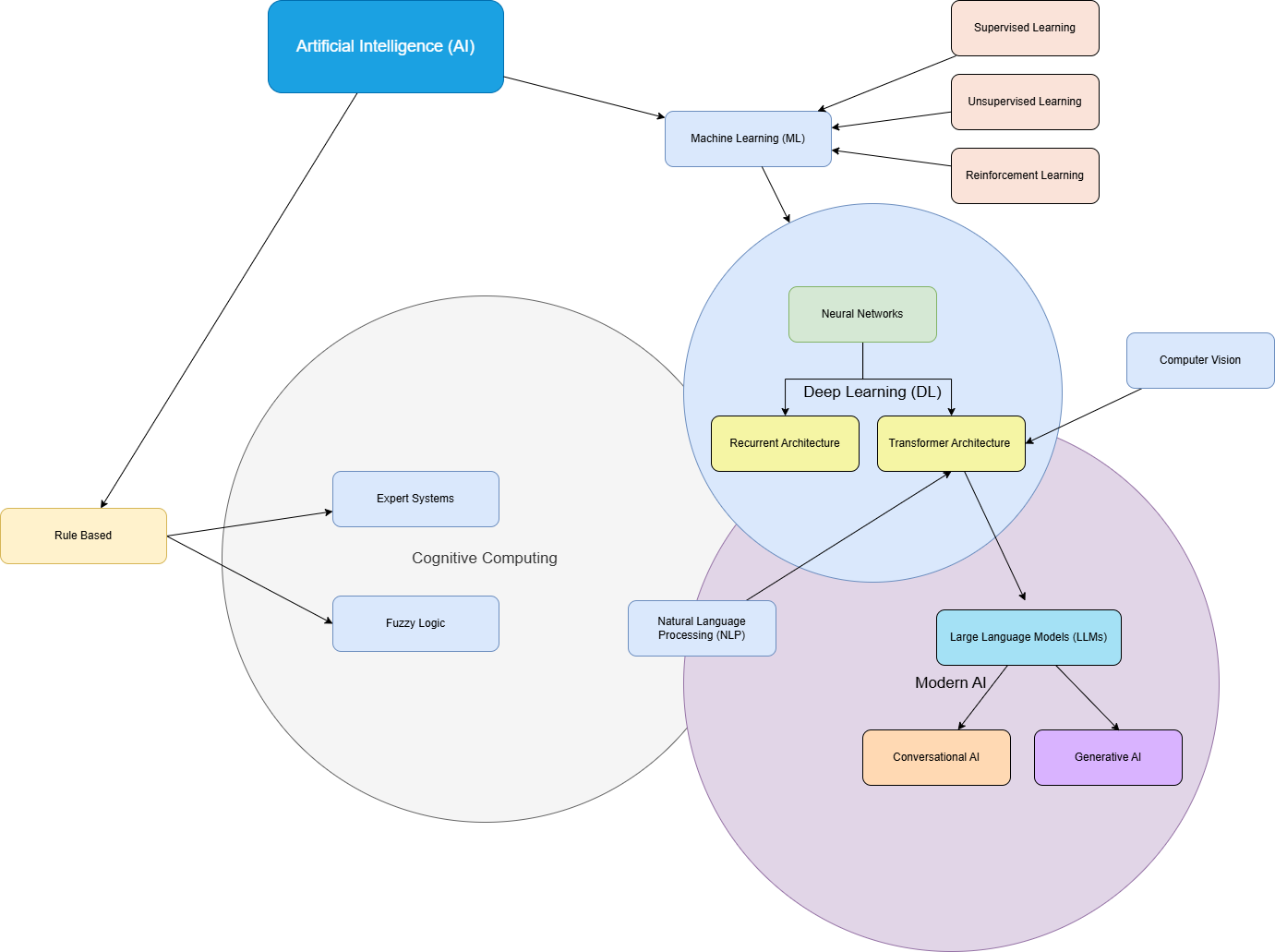

The reality is that there is not just one buzzword, nor is there a singular technology driving AI forward. AI is an umbrella term that encompasses a vast range of fields and techniques, each playing a role in its overall progress. And at the core of all of them? Algorithms.

Algorithm is a buzzword that keeps resurfacing, and for good reason: it’s the foundation of all AI advancements. Whether it’s machine learning, neural networks, NLP, deep learning, or even fuzzy logic, they are all just different types of algorithms designed to process data in specific ways.

AI is built on mathematical models, probability, statistics, optimization techniques, and computer architectures that enable it to learn from patterns and make decisions. AI isn’t magic—it’s just mathematics. Multiple technologies, each with its own specialized role, are working together at an enormous scale to create what we now recognize as modern AI.

This brings us to the many branches and sub-branches of AI, each specializing in different problem-solving approaches:

- Expert Systems – Rule-based programs that make decisions for specific tasks using predefined if-then rules and structured knowledge bases. This is symbolic reasoning: logical, rule-based decision-making.

- Fuzzy Logic – A rule-based method for reasoning with approximate, imprecise, or incomplete information. Instead of treating a statement as strictly true or false, it assigns degrees of truth. This is approximate reasoning: handling uncertainty and partial truths.

- Machine Learning – A method to analyze data by automatically building models from large datasets instead of relying solely on explicit rules and logic; it learns patterns from the data itself and can continue to evolve. It does this via:

    - Supervised Learning (training with labeled data)

    - Unsupervised Learning (finding hidden patterns in unlabeled data)

    - Reinforcement Learning (learning through rewards and penalties)

- Deep Learning – A subset of machine learning that uses multi-layered neural networks to learn from vast amounts of data, improving pattern recognition and replacing many earlier rule-based methods. Neural networks are the backbone of deep learning, and their structure is loosely inspired by the way the human brain processes information.

- Natural Language Processing (NLP) – Allows machines to read, understand, and generate human language.

- Computer Vision – Enables machines to interpret and process visual information from images or videos.
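To make the contrast between the first three branches concrete, here is a minimal sketch in Python. It is illustrative only: the thermostat scenario, the function names, the thresholds, and the sample data are all assumptions invented for this example, not taken from any real system. It shows a hard if-then rule (Expert System style), a degree of truth between 0 and 1 (Fuzzy Logic style), and a cutoff inferred from labeled examples (Supervised Learning style).

```python
# Illustrative only: the scenario, thresholds, and sample data are made up.

def expert_rule(temp_c: float) -> str:
    """Expert-system style: a hand-written if-then rule with a hard cutoff."""
    return "fan_on" if temp_c >= 30.0 else "fan_off"


def fuzzy_hot(temp_c: float, low: float = 20.0, high: float = 40.0) -> float:
    """Fuzzy-logic style: degree of truth (0.0 to 1.0) that the room is 'hot'."""
    if temp_c <= low:
        return 0.0
    if temp_c >= high:
        return 1.0
    return (temp_c - low) / (high - low)


def learn_threshold(samples: list[tuple[float, bool]]) -> float:
    """Supervised-learning style: pick the cutoff that best fits labeled data."""
    candidates = sorted(temp for temp, _ in samples)

    def errors(cut: float) -> int:
        # Count samples where "temp >= cut" disagrees with the label.
        return sum((temp >= cut) != label for temp, label in samples)

    return min(candidates, key=errors)


# Hypothetical labeled data: (temperature, "did a person call it hot?")
labeled = [(18.0, False), (22.0, False), (27.0, True), (31.0, True), (35.0, True)]

print(expert_rule(29.0))         # hard rule: one fixed answer either side of the cutoff
print(fuzzy_hot(29.0))           # partial truth somewhere between 0 and 1
print(learn_threshold(labeled))  # cutoff inferred from the labels, not hand-written
```

The point of the sketch is where the knowledge lives: in the first two functions a person wrote the numbers, while in the third the number comes out of the data.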

Another branch of AI that is less pertinent to this discussion is Robotics.

The Shift from Cognitive Computing to LLMs

In 2011, IBM heavily promoted Cognitive Computing with IBM Watson, which demonstrated AI’s ability to understand and process natural language by competing on Jeopardy! At the time, Watson relied on NLP, information retrieval, and machine learning to simulate human-like reasoning, and “Cognitive Computing” became the buzzword IBM used to market this approach.

Today, however, the AI landscape has shifted, and the buzzword has pivoted from Cognitive Computing to LLMs. While both Cognitive Computing and LLMs build on NLP and machine learning, their underlying architectures are different. The difference? Transformer-based models (like OpenAI’s GPT and Google’s BERT) have made AI vastly more capable, scalable, and accessible than what IBM Watson represented in 2011.

Why AI Has Exploded in the 2020s

AI has existed for decades, but for many, it feels like an overnight explosion. Why? No single breakthrough is responsible. A combination of advances, including Transformer architectures, increased computational power, and vast datasets, has fueled the rise of Large Language Models (LLMs) and made AI more accessible than ever before. This momentum shows no signs of slowing down. The main factors are:

- The Rise of Deep Learning & Neural Networks Advancing NLP – Before deep learning, Natural Language Processing (NLP) relied on rule-based methods and statistical models, such as Hidden Markov Models (HMMs), Naïve Bayes, and n-gram models. These approaches had significant limitations, as they lacked contextual awareness.

Before Transformers, deep learning approaches to NLP relied on Recurrent Neural Networks (RNNs) and Long Short-Term Memory networks (LSTMs), which improved sequential text processing but struggled with long-range dependencies. These challenges led to the development of Transformer architectures, which revolutionized NLP by eliminating the bottleneck of sequential text processing. Unlike RNNs and LSTMs, which process text one word at a time, Transformers allow models to analyze entire sequences in parallel, dramatically improving AI’s ability to understand and generate human-like text.

- The Shift to Transformer-Based Models – The introduction of transformer architectures, popularized by models such as OpenAI’s GPT and Google’s BERT, revolutionized AI’s ability to understand and generate language. Transformer-based models process words in context rather than just sequentially, dramatically improving AI’s capabilities.

- LLMs Use Probabilistic & Neural Network-Based Processing – Unlike earlier AI models that relied on rule-based decision-making (such as Expert Systems or Fuzzy Logic), Large Language Models (LLMs) generate responses based on probability distributions learned from massive datasets, making their outputs far more flexible and scalable.

- Computer Vision Advancement – Early approaches used handcrafted feature extraction methods (e.g., SIFT, HOG, Haar Cascades) and were often combined with traditional machine learning techniques like Support Vector Machines (SVMs). While effective for specific tasks, these methods struggled to generalize. The 2010s marked a turning point, as Convolutional Neural Networks (CNNs) revolutionized Computer Vision, allowing models to automatically learn and extract features from images without manual engineering. Since 2020, Vision Transformers (ViTs) have further advanced Computer Vision by enabling image processing through self-attention mechanisms rather than convolutional filters.

- Ease of Access: AI Is Everywhere Now – In 2011, if you wanted to experience AI, you had to either watch Jeopardy! to see IBM Watson in action or be an IBM engineer working on it. AI was a corporate-level innovation, inaccessible to the general public.

Today? AI is everywhere. In 2023, Bing integrated GPT-4, and in 2024, Google added Gemini-powered AI features to its search.

The release of GPT-4 in March 2023 marked a turning point in the accessibility of AI-powered conversational models, making advanced AI capabilities available to businesses and the public at an unprecedented scale.

Want AI in your business product? You don’t need IBM’s hardware; you can just call an API. Cloud-based AI services from OpenAI, Google, and Microsoft make it possible for companies and individuals to integrate AI into their products without needing expensive infrastructure.
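Two of the ideas in the list above, Transformers looking at a whole sequence at once and LLMs choosing words from a probability distribution, can be sketched in a few lines of plain Python. This is a heavily simplified illustration, not how any production model is implemented: the function names, the tiny two-dimensional "embeddings", and the scores are all made up, and the attention function uses the raw input vectors where a real Transformer would use learned projections.

```python
import math
import random


def softmax(scores: list[float]) -> list[float]:
    """Turn arbitrary scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors: list[list[float]]) -> list[list[float]]:
    """Simplified self-attention: queries, keys, and values are all the raw
    input vectors (a real Transformer uses learned projections for each)."""
    scale = math.sqrt(len(vectors[0]))
    output = []
    for query in vectors:
        # Score this position against every position in the sequence.
        scores = [sum(q * k for q, k in zip(query, key)) / scale
                  for key in vectors]
        weights = softmax(scores)
        # New vector = weighted mix of all input vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors))
                 for i in range(len(query))]
        output.append(mixed)
    return output


def sample_next_token(logits: dict[str, float], rng: random.Random) -> str:
    """Pick the next token at random, weighted by its softmax probability."""
    tokens = list(logits)
    probs = softmax([logits[t] for t in tokens])
    return rng.choices(tokens, weights=probs, k=1)[0]


# Made-up 2-dimensional "embeddings" for a three-token sequence.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextual = self_attention(embeddings)
print(contextual)

# Made-up scores a model might assign to candidate next tokens.
logits = {"cat": 2.0, "dog": 1.0, "car": -1.0}
print(sample_next_token(logits, random.Random(0)))
```

The loop in `self_attention` runs one position at a time for readability, but each position’s result depends only on the inputs, never on another position’s output. That independence is what lets real implementations compute every position in parallel, unlike an RNN, where each step has to wait for the previous one.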

Are Rule-Based Systems Obsolete?

Modern AI models like ChatGPT have largely moved away from the rule-based techniques of earlier AI approaches, such as Fuzzy Logic and Expert Systems, and instead rely on deep learning, probabilistic methods, and massive data-driven architectures.

Even so, companies may still use Expert Systems and Fuzzy Logic alongside LLMs. While OpenAI’s ChatGPT and similar models do not use Fuzzy Logic or Expert Systems internally, companies integrating these AI models often embed them in larger rule-based frameworks. For example, an Expert System might validate AI-generated legal advice, or a Fuzzy Logic system might refine AI-powered decision-making in industrial automation. This demonstrates that Expert Systems and Fuzzy Logic have not disappeared; rather, they are evolving alongside modern AI architectures.
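As a rough sketch of what that pairing could look like, the Python below wraps a model’s output in a small set of if-then checks. Everything here is hypothetical: `generate_draft` is a stand-in for a call to a language model API and simply returns a canned string, and the two rules are invented for illustration rather than drawn from any real legal or compliance policy.

```python
import re
from dataclasses import dataclass


@dataclass
class Verdict:
    approved: bool
    reasons: list[str]


# Hypothetical if-then rules: each pair is (violation test, explanation).
RULES = [
    (lambda text: "guarantee" in text.lower(),
     "makes a guarantee about an outcome"),
    (lambda text: re.search(r"\bconsult (a|an|your) (lawyer|attorney)\b",
                            text.lower()) is None,
     "missing a pointer to a qualified professional"),
]


def generate_draft(question: str) -> str:
    """Hypothetical stand-in for a call to a language model API."""
    return "You are guaranteed to win this case."


def validate(text: str) -> Verdict:
    """Rule-based check: collect every rule the draft violates."""
    reasons = [reason for violated, reason in RULES if violated(text)]
    return Verdict(approved=not reasons, reasons=reasons)


draft = generate_draft("Can I contest this parking fine?")
verdict = validate(draft)
print(verdict)
```

The model stays probabilistic and flexible, while the rule layer stays predictable and auditable: a rejected draft comes with the exact rules it broke, which is the kind of explanation a purely learned system does not give on its own.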

Final Thoughts

As AI continues to evolve, new buzzwords will keep emerging, each representing the next step in the long journey of technological progress, with some fading into history and others shaping the future. At its core, AI is what it has always been: a set of evolving tools designed to tackle increasingly complex challenges. As AI models grow larger and more capable, the next frontier is already emerging: multimodal AI, where models are no longer confined to a single modality but can seamlessly integrate text, vision, and speech. Beyond that, advancements in agent-based reasoning and self-improving AI systems will continue to redefine the future of artificial intelligence, which looks to be headed towards Artificial General Intelligence (AGI) rather than just Artificial Intelligence (AI).

While researching this topic, I came across a lot of interesting material and figured I’d share. You may find these articles insightful:

- For a historical overview of AI’s evolution, see IBM’s History of AI.

- For a deeper look into deep learning and neural networks, see DATAVERSITY’s history of deep learning.

- For an in-depth annotated history of modern AI, including neural networks and deep learning, see Juergen Schmidhuber’s paper.

- For a timeline of ChatGPT’s development and release dates, see Search Engine Journal’s history of ChatGPT.

- For an overview of recent advancements in AI and machine learning, see Case Western Reserve University’s report.